Codis (Part 1)

Redis clustering: installing and using Codis

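Our project has been through several server merges and more than two years of operation, and a single server now has over 6 million players. The original architecture loaded every player's summary data from the database when the server started. With tens or hundreds of thousands of players, startup took only a minute or two, but loading the summary data of all 6 million players could take tens of minutes. The project therefore moved player summary data and leaderboard data into Redis. I chose Codis, a Go-based Redis cluster that scales out horizontally, so total memory grows as more Redis instances are added. This post records the full process of deploying and using Codis.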

Installation environment

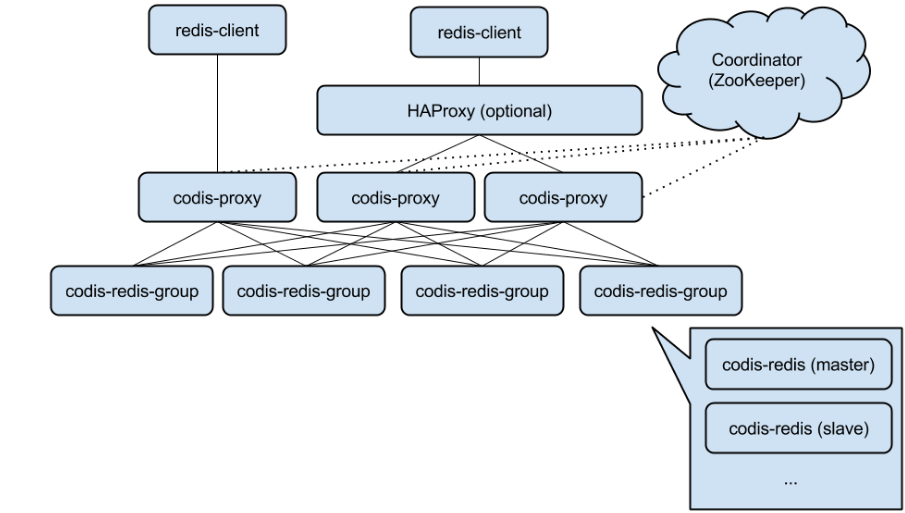

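codis-proxy behaves like Redis: connecting to codis-proxy is no different from connecting to Redis directly. codis-proxy is stateless and does not itself record where keys are stored. The slot-to-group routing table is kept in ZooKeeper, and the proxy uses it to find which group owns a key's slot and forwards the request to that group. Each group consists of one master and one or more slaves. By default there are 1024 slots, and different slots are placed in different groups. Codis is written in Go, so building it requires a Go environment. Every server also needs Java and ZooKeeper installed; a ZooKeeper ensemble needs at least 3 servers so that a majority stays available if one of them fails.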
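To make the slot routing concrete, here is a small Go sketch of how a key could be mapped to one of the 1024 slots and then to a group. It assumes, based on my reading of the Codis 3.x source rather than anything stated above, that the slot is CRC32 (IEEE) of the key modulo 1024, with Redis-Cluster-style `{...}` hash tags. The slot table and group ids are hypothetical; in a real deployment the slot assignment is made through the dashboard.

```go
package main

import (
	"bytes"
	"fmt"
	"hash/crc32"
)

// numSlots is the default slot count described above.
const numSlots = 1024

// hashTag returns the bytes between the first '{' and the following '}',
// so keys such as "{player:42}:name" and "{player:42}:rank" hash identically.
// Keys without a complete tag are hashed as a whole.
func hashTag(key []byte) []byte {
	if beg := bytes.IndexByte(key, '{'); beg >= 0 {
		if end := bytes.IndexByte(key[beg+1:], '}'); end >= 0 {
			return key[beg+1 : beg+1+end]
		}
	}
	return key
}

// slotOf maps a key to a slot in [0, numSlots) using CRC32 (IEEE) modulo 1024.
// Assumption: this mirrors Codis 3.x hashing; verify against your Codis version.
func slotOf(key string) int {
	return int(crc32.ChecksumIEEE(hashTag([]byte(key))) % numSlots)
}

// groupOf returns the group owning the key's slot in a slot table
// (index = slot, value = group id). The table is hypothetical.
func groupOf(slotTable []int, key string) int {
	return slotTable[slotOf(key)]
}

func main() {
	// Hypothetical layout: slots 0-511 on group 1, slots 512-1023 on group 2.
	slotTable := make([]int, numSlots)
	for s := range slotTable {
		if s < numSlots/2 {
			slotTable[s] = 1
		} else {
			slotTable[s] = 2
		}
	}
	for _, k := range []string{"abc", "{player:42}:name", "{player:42}:rank"} {
		fmt.Printf("%-18s slot=%4d group=%d\n", k, slotOf(k), groupOf(slotTable, k))
	}
}
```

Under this assumption, a hash tag such as `{player:42}` keeps all of one player's keys in the same slot, and therefore on the same group.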

Install ZooKeeper

wget http://219.238.7.67/files/205200000AF1D7B9/mirrors.hust.edu.cn/apache/zookeeper/zookeeper-3.4.11/zookeeper-3.4.11.tar.gz

tar -xzf zookeeper-3.4.11.tar.gz -C /data/service

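Install Java

tar zxf jdk-8u131-linux-x64.gz

mv jdk1.8.0_131 /usr/local/

Put java on the PATH (for example in /root/.bash_profile) so that ZooKeeper can find it:

export JAVA_HOME=/usr/local/jdk1.8.0_131

export PATH=$PATH:$JAVA_HOME/bin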

Install the Go build environment

[root@node1 ~]# cd /usr/local/src

[root@node1 src]# yum install -y gcc glibc gcc-c++ make git

[root@node1 src]# wget https://storage.googleapis.com/golang/go1.7.3.linux-amd64.tar.gz

[root@node1 src]# tar zxf go1.7.3.linux-amd64.tar.gz

[root@node1 src]# mv go /usr/local/

[root@node1 src]# mkdir /usr/local/go/work

[root@node1 src]# vim /root/.bash_profile

export PATH=$PATH:/usr/local/go/bin

export GOROOT=/usr/local/go

export GOPATH=/usr/local/go/work

export PATH=$PATH:$HOME/bin:$GOROOT/bin:$GOPATH/bin

[root@node1 src]# source /root/.bash_profile

[root@node1 src]# echo $GOPATH

/usr/local/go/work

[root@node1 ~]# go version

go version go1.7.3 linux/amd64

ZooKeeper configuration file (conf/zoo.cfg)

# Basic time unit used by ZooKeeper, in milliseconds.

tickTime=2000

# Max time for a follower to connect to and sync with the leader at startup: initLimit * tickTime ms

initLimit=10

# Max time for a request/response exchange between leader and follower: syncLimit * tickTime ms

syncLimit=5

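# Data directory; any directory works.

dataDir=/data/service/zookeeper-data/data

# Transaction log directory

dataLogDir=/data/service/zookeeper-data/logs

# Listen for peer (quorum/election) connections on all local IPs, not only the address in the server list; I added this to fix a startup failure

quorumListenOnAllIPs=true

# Port on which clients connect.

clientPort=19021

# Maximum number of concurrent connections from a single client IP.

#maxClientCnxns=60

# Automatic purging of old snapshots and transaction logs (maintenance)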

#autopurge.snapRetainCount=3

#autopurge.purgeInterval=1

server.1 = 0.0.0.0:19022:19029

server.2 = 172.31.0.17:19022:19029

server.3 = 172.31.0.79:19022:19029

Explanation:

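tickTime: the heartbeat interval between ZooKeeper servers, and between clients and servers; a heartbeat is sent every tickTime milliseconds.

dataDir: the directory where ZooKeeper stores its data. By default ZooKeeper also writes its transaction logs here, unless dataLogDir is set, as it is in this configuration.

clientPort: the port ZooKeeper listens on for client connections.

initLimit: how many tick intervals ZooKeeper tolerates while a "client" completes its initial connection. Here "client" does not mean an application connecting to ZooKeeper, but a Follower server in the ensemble connecting to the Leader. If nothing comes back from the follower within initLimit ticks, the connection is considered failed. With initLimit=10 and tickTime=2000, the limit is 10 × 2000 ms = 20 seconds.

syncLimit: the maximum time, in ticks, for a request/response exchange between the Leader and a Follower. Here it is 5 × 2000 ms = 10 seconds.

server.A=B:C:D: A is the server number; B is the server's IP address; C is the port this server uses to exchange information with the ensemble's Leader; D is the port used for leader election, i.e. the port the servers talk over to elect a new Leader if the current one fails. In a pseudo-cluster (several instances on one machine), B is the same for every entry, so each instance must be given different port numbers.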

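Start ZooKeeper

Each server needs a myid file in dataDir containing its own number from the server.N lines, e.g. on the host configured as server.1:

echo 1 > /data/service/zookeeper-data/data/myid

Then start ZooKeeper on every server (zkServer.sh reads conf/zoo.cfg by default) and check its role:

/data/service/zookeeper-3.4.11/bin/zkServer.sh start

/data/service/zookeeper-3.4.11/bin/zkServer.sh status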

Install and deploy Codis

Download and build Codis 3.2

I simply used an already-compiled Codis 3.2 release.

Codis configuration files

/codis/config/dashboard.toml

##################################################

# #

# Codis-Dashboard #

# #

##################################################

# Set Coordinator, only accept "zookeeper" & "etcd" & "filesystem".

# for zookeeper/etcd, coordinator_auth accept "user:password"

# Quick Start

#coordinator_name = "filesystem"

#coordinator_addr = "/tmp/codis"

coordinator_name = "zookeeper"

coordinator_addr = "172.31.0.84:19021"

#coordinator_auth = ""

# Set Codis Product Name/Auth.

product_name = "codis-warship"

product_auth = ""

# Set bind address for admin(rpc), tcp only.

admin_addr = "0.0.0.0:18080"

# Set arguments for data migration (only accept 'sync' & 'semi-async').

migration_method = "semi-async"

migration_parallel_slots = 100

migration_async_maxbulks = 200

migration_async_maxbytes = "32mb"

migration_async_numkeys = 500

migration_timeout = "30s"

# Set configs for redis sentinel.

sentinel_client_timeout = "10s"

sentinel_quorum = 2

sentinel_parallel_syncs = 1

sentinel_down_after = "30s"

sentinel_failover_timeout = "5m"

sentinel_notification_script = ""

sentinel_client_reconfig_script = ""

Start the dashboard:

[root@node1 codis]# nohup ./bin/codis-dashboard --ncpu=1 --config=config/dashboard.toml --log=dashboard.log --log-level=WARN >> /var/log/codis_dashboard.log &

/codis/config/proxy.toml

##################################################

# #

# Codis-Proxy #

# #

##################################################

# Set Codis Product Name/Auth.

product_name = "codis-warship"

product_auth = ""

# Set auth for client session

# 1. product_auth is used for auth validation among codis-dashboard,

# codis-proxy and codis-server.

# 2. session_auth is different from product_auth, it requires clients

# to issue AUTH <PASSWORD> before processing any other commands.

session_auth = ""

# Set bind address for admin(rpc), tcp only.

admin_addr = "0.0.0.0:11080"

# Set bind address for proxy, proto_type can be "tcp", "tcp4", "tcp6", "unix" or "unixpacket".

proto_type = "tcp4"

proxy_addr = "0.0.0.0:19000"

# Set jodis address & session timeout

# 1. jodis_name is short for jodis_coordinator_name, only accept "zookeeper" & "etcd".

# 2. jodis_addr is short for jodis_coordinator_addr

# 3. jodis_auth is short for jodis_coordinator_auth, for zookeeper/etcd, "user:password" is accepted.

# 4. proxy will be registered as node:

# if jodis_compatible = true (not suggested):

# /zk/codis/db_{PRODUCT_NAME}/proxy-{HASHID} (compatible with Codis2.0)

# or else

# /jodis/{PRODUCT_NAME}/proxy-{HASHID}

jodis_name = "zookeeper"

jodis_addr = "172.31.0.84:19021,172.31.0.17:19021,172.31.0.79:19021"

jodis_auth = ""

jodis_timeout = "20s"

jodis_compatible = true

# Set datacenter of proxy.

proxy_datacenter = ""

# Set max number of alive sessions.

proxy_max_clients = 1000

# Set max offheap memory size. (0 to disable)

proxy_max_offheap_size = "1024mb"

# Set heap placeholder to reduce GC frequency.

proxy_heap_placeholder = "256mb"

# Proxy will ping backend redis (and clear 'MASTERDOWN' state) in a predefined interval. (0 to disable)

backend_ping_period = "5s"

# Set backend recv buffer size & timeout.

backend_recv_bufsize = "128kb"

backend_recv_timeout = "30s"

# Set backend send buffer & timeout.

backend_send_bufsize = "128kb"

backend_send_timeout = "30s"

# Set backend pipeline buffer size.

backend_max_pipeline = 20480

# Set backend never read replica groups, default is false

backend_primary_only = false

# Set backend parallel connections per server

backend_primary_parallel = 1

backend_replica_parallel = 1

# Set backend tcp keepalive period. (0 to disable)

backend_keepalive_period = "75s"

# Set number of databases of backend.

backend_number_databases = 16

# If there is no request from client for a long time, the connection will be closed. (0 to disable)

# Set session recv buffer size & timeout.

session_recv_bufsize = "128kb"

session_recv_timeout = "30m"

# Set session send buffer size & timeout.

session_send_bufsize = "64kb"

session_send_timeout = "30s"

# Make sure this is higher than the max number of requests for each pipeline request, or your client may be blocked.

# Set session pipeline buffer size.

session_max_pipeline = 10000

# Set session tcp keepalive period. (0 to disable)

session_keepalive_period = "75s"

# Set session to be sensitive to failures. Default is false, instead of closing socket, proxy will send an error response to client.

session_break_on_failure = false

# Set metrics server (such as http://localhost:28000), proxy will report json formatted metrics to specified server in a predefined period.

metrics_report_server = ""

metrics_report_period = "1s"

# Set influxdb server (such as http://localhost:8086), proxy will report metrics to influxdb.

metrics_report_influxdb_server = ""

metrics_report_influxdb_period = "1s"

metrics_report_influxdb_username = ""

metrics_report_influxdb_password = ""

metrics_report_influxdb_database = ""

# Set statsd server (such as localhost:8125), proxy will report metrics to statsd.

metrics_report_statsd_server = ""

metrics_report_statsd_period = "1s"

metrics_report_statsd_prefix = ""
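The jodis_* comments in proxy.toml describe where each proxy registers itself in ZooKeeper. The hypothetical helper below only formats those two documented layouts, to show where the proxies of this deployment (product_name = "codis-warship", jodis_compatible = true) end up; the proxy id passed in is a placeholder, not a real {HASHID}.

```go
package main

import "fmt"

// jodisPath returns the ZooKeeper node a proxy registers under, following the
// two layouts documented in the proxy.toml comments. Hypothetical helper;
// proxyID stands in for the proxy's {HASHID}.
func jodisPath(product, proxyID string, compatible bool) string {
	if compatible {
		// Codis 2.0-compatible layout (jodis_compatible = true).
		return fmt.Sprintf("/zk/codis/db_%s/proxy-%s", product, proxyID)
	}
	// Default Codis 3 layout.
	return fmt.Sprintf("/jodis/%s/proxy-%s", product, proxyID)
}

func main() {
	// product_name and jodis_compatible as configured above; the id is a placeholder.
	fmt.Println(jodisPath("codis-warship", "<hashid>", true))
	fmt.Println(jodisPath("codis-warship", "<hashid>", false))
}
```

With jodis_compatible = true the proxy nodes appear under /zk/codis/db_codis-warship/; the config comment marks this layout as not suggested, so a fresh deployment would usually leave it false and use /jodis/codis-warship/.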

Start the proxy

[root@node1 codis]# nohup ./bin/codis-proxy --ncpu=1 --config=config/proxy.toml --log=proxy.log --log-level=WARN >> /var/log/codis_proxy.log &

/codis/config/redis-6380.conf: the main Redis settings to change are these:

bind 172.31.0.84

port 6380

pidfile "/tmp/redis_6380.pid"

maxmemory 12G

dir "/data/service/codis"

logfile "/tmp/redis_6380.log"
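One detail in `maxmemory 12G`: Redis reads `12G` as 12 × 10^9 bytes (about 11.18 GiB), whereas `12gb` means 12 × 2^30 bytes; units are case-insensitive. The Go sketch below reimplements those documented unit rules to show the difference. It is not Redis's own parser, and I assume codis-server, being built from Redis, reads the file the same way.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseRedisMemory converts a redis.conf memory value to bytes using Redis's
// documented unit rules: k/m/g are powers of 1000, kb/mb/gb are powers of 1024,
// and units are case-insensitive.
func parseRedisMemory(s string) (int64, error) {
	s = strings.ToLower(strings.TrimSpace(s))
	units := []struct {
		suffix string
		mul    int64
	}{
		// Two-letter suffixes first so "12gb" is not matched as plain "b".
		{"kb", 1 << 10}, {"mb", 1 << 20}, {"gb", 1 << 30},
		{"k", 1000}, {"m", 1000 * 1000}, {"g", 1000 * 1000 * 1000},
		{"b", 1},
	}
	mul := int64(1)
	for _, u := range units {
		if strings.HasSuffix(s, u.suffix) {
			s, mul = strings.TrimSuffix(s, u.suffix), u.mul
			break
		}
	}
	n, err := strconv.ParseInt(s, 10, 64)
	if err != nil {
		return 0, err
	}
	return n * mul, nil
}

func main() {
	for _, v := range []string{"12G", "12gb"} {
		n, err := parseRedisMemory(v)
		if err != nil {
			panic(err)
		}
		fmt.Printf("maxmemory %-4s = %d bytes\n", v, n)
	}
}
```

The gap between the two spellings is about 885 MB, so if a full 12 GiB is intended, `12gb` is the spelling to use.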

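Start Redis with codis-server, passing it the config file. Do not start it with the stock redis-server: an instance started that way does not work properly once added to the Codis cluster, because codis-server is a patched Redis that adds the slot-migration commands Codis relies on.

[root@redis1 codis]# ./bin/codis-server config/redis-6380.conf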

Configure the Codis servers through the Codis web UI (codis-fe)

Start codis-fe, then open http://172.31.0.84:8060 in a browser:

nohup ./bin/codis-fe --ncpu=1 --log=fe.log --log-level=WARN --zookeeper=172.31.0.84:19021 --listen=172.31.0.84:8060 &

The configuration above is what I actually use in production; the official tutorial is here:

https://github.com/CodisLabs/codis/blob/release3.2/doc/tutorial_zh.md